Utility AI Final Year Research & Development Projects

- Parsa Arashmehr

- Apr 17, 2025

Updated: Aug 13, 2025

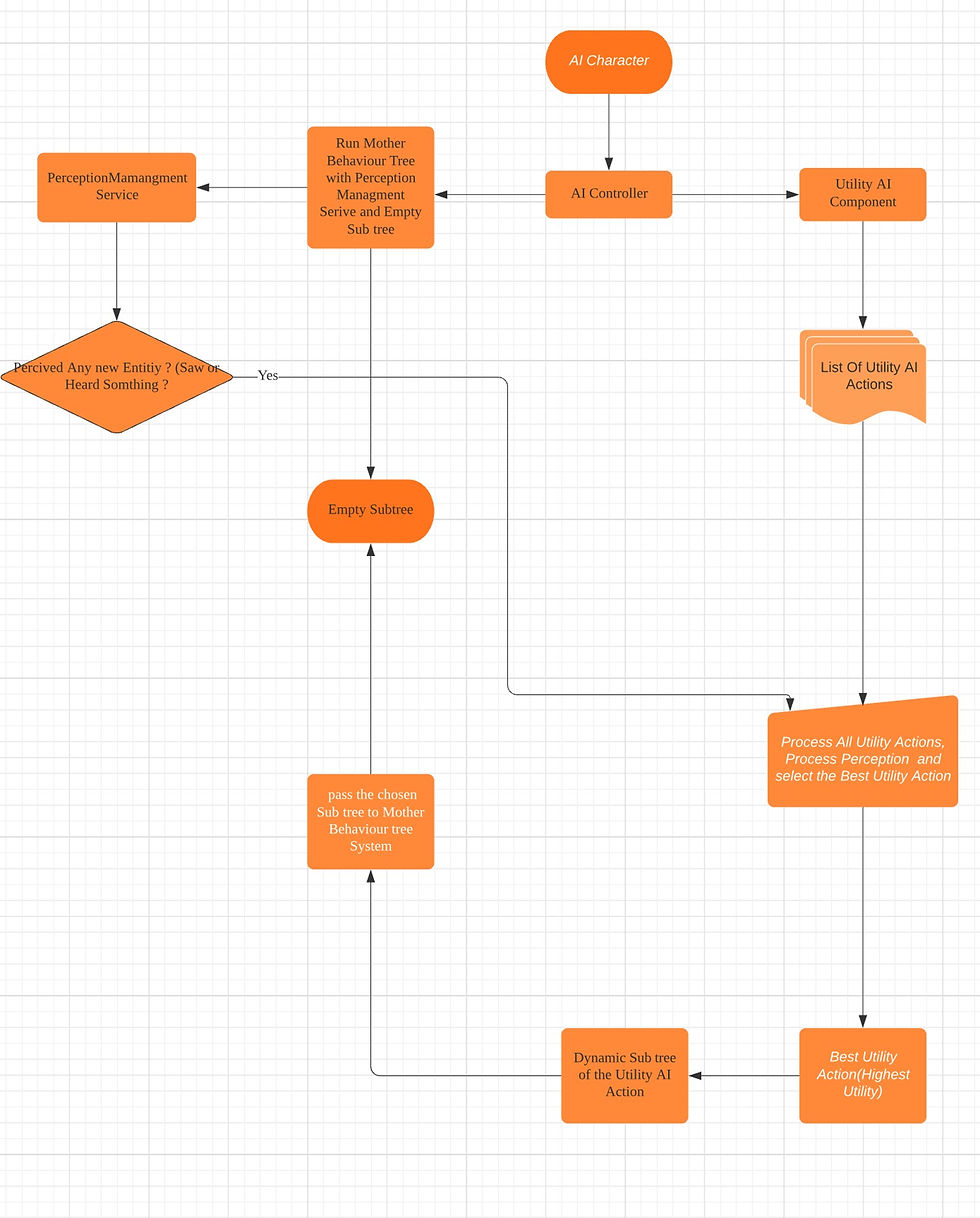

In my final year project at APU, I did extensive research on utility theory using Unreal Engine. I created a framework for AI agents that employs Score-based Utility AI. This framework can be integrated with behavior trees for AI agents, enabling the development of precise and emergent AI behaviors.

What Is Utility Theory:

Utility theory is a score-based decision-making system that uses scores as the priority for each possible action. Like behavior trees, utility theory is a priority-based system; however, the priority is determined by the score of each task, and the scores of all tasks are calculated simultaneously.

Utility Tasks (Actions): Each AI agent has multiple utility tasks assigned to it. Each task is something the AI can do, e.g. a moving task, an attacking task, a healing task, a hiding task, etc.

Utility Scores:

Each task has a function called "utility score," which ticks as long as the preconditions for that task are met. For example, if the character "can move" (preconditions: not falling, not jumping, etc.), the "moving task" can tick and call the utility score function on each frame. The utility score function returns a float value called "score," which is the priority of that task. The task with the highest score is therefore selected as the highest-priority action and is executed by the framework.
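The selection step described above can be sketched in plain C++. This is only an illustration under stated assumptions, not the framework's actual code: `AgentState`, `UtilityTask`, `SelectBestTask`, and `MakeExampleTasks` are hypothetical names, the score formulas are invented for the example, and no Unreal Engine types are used.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical snapshot of what the agent knows this frame.
struct AgentState {
    bool isFalling = false;
    bool isJumping = false;
    float health = 1.0f;          // normalized, 0 = dead, 1 = full
    float distanceToEnemy = 0.0f; // world units
};

// One utility task: a precondition gate plus a score function.
struct UtilityTask {
    std::string name;
    std::function<bool(const AgentState&)> canRun;
    std::function<float(const AgentState&)> score;
};

// Returns the highest-scoring task whose precondition holds,
// or nullptr when no task is allowed to run.
const UtilityTask* SelectBestTask(const std::vector<UtilityTask>& tasks,
                                  const AgentState& state) {
    const UtilityTask* best = nullptr;
    float bestScore = 0.0f;
    for (const UtilityTask& task : tasks) {
        assert(task.canRun && task.score); // both callbacks must be set
        if (!task.canRun(state)) {
            continue; // precondition failed: this task does not tick
        }
        const float s = task.score(state);
        if (best == nullptr || s > bestScore) {
            best = &task;
            bestScore = s;
        }
    }
    return best;
}

// Two illustrative tasks; the formulas are assumptions for this sketch.
std::vector<UtilityTask> MakeExampleTasks() {
    std::vector<UtilityTask> tasks;
    tasks.push_back({"Move",
        [](const AgentState& s) { return !s.isFalling && !s.isJumping; },
        [](const AgentState& s) {
            // Farther enemies make moving more attractive, capped at 1.
            return std::min(1.0f, std::max(0.0f, s.distanceToEnemy / 1000.0f));
        }});
    tasks.push_back({"Heal",
        [](const AgentState&) { return true; },
        [](const AgentState& s) { return 1.0f - s.health; }});
    return tasks;
}
```

With health at 0.2 and the enemy 300 units away, the heal task scores about 0.8 against 0.3 for moving, so healing wins. A falling agent skips the moving task entirely because its precondition fails.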

Fuzzy Logic:

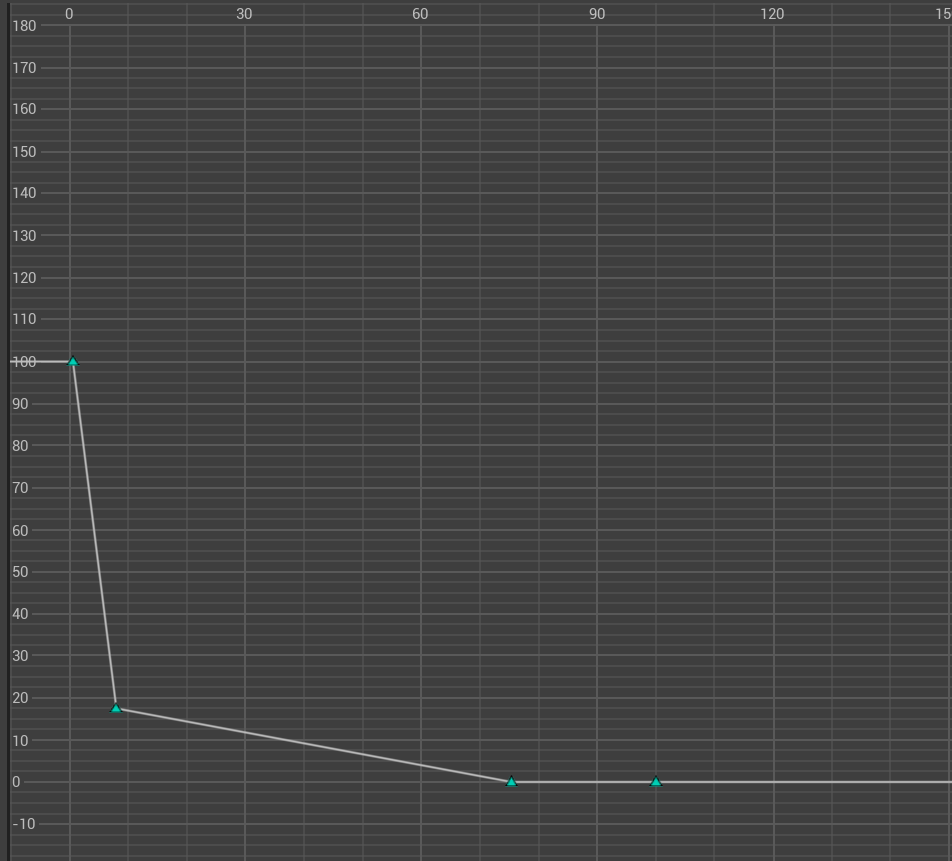

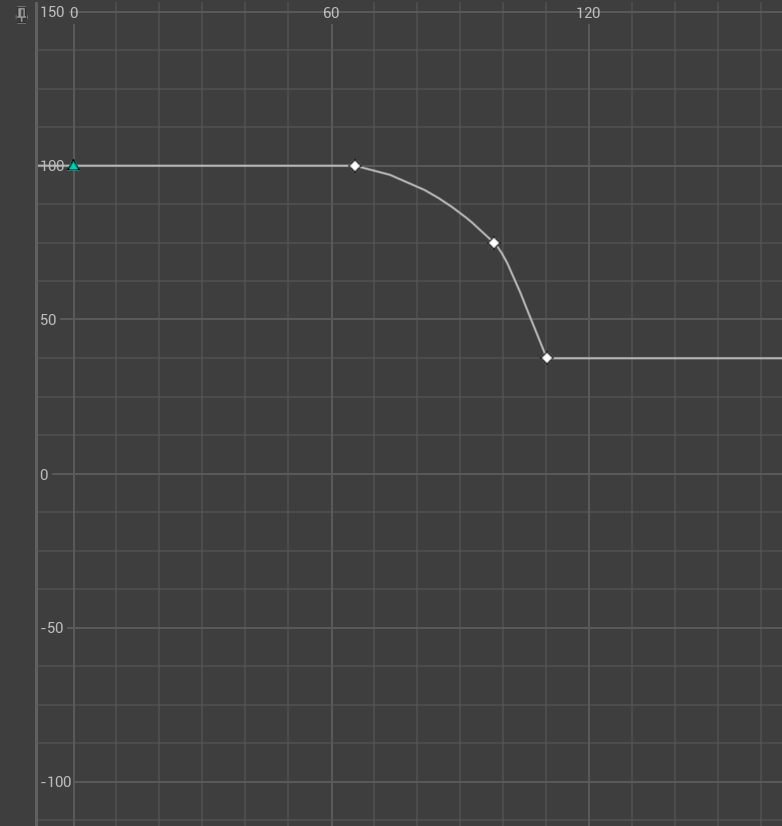

The key part of the system is how to determine the utility score for each task (action). For instance, the AI has access to factors like "distance to enemy," "visibility," "navigability," "availability of items," and "health." We can combine these values with data-driven implementations (like "float curves" and "custom curves") that act as "weights" for the agent's preferences and simulate a sense of behavior. For instance, if the AI is a "tough guy," the "escaping task" has a very low weight modifier, and the AI has a low tendency to escape. On the other hand, if the AI is a "coward," the "escaping task" has a higher weight.

As you can see in the graphs above, a "brave agent" doesn't escape the conflict until its health is really low. A "coward agent," on the other hand, tends to escape as soon as its health reaches 60%. Using the curves and data assets, we can easily modify the agents' behavior and give them a "character."
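To make the curve idea concrete, here is a small sketch of a piecewise-linear response curve that maps health to an escape score. It is a stand-in for a float curve asset, not Unreal's curve API: `ResponseCurve`, `MakeBraveEscapeCurve`, and `MakeCowardEscapeCurve` are hypothetical names, and every key value except the coward's 60% threshold is an assumption chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// A piecewise-linear curve defined by (input, output) keys.
// Keys must be sorted by strictly ascending input.
struct ResponseCurve {
    std::vector<std::pair<float, float>> keys;

    // Linearly interpolates between neighboring keys and clamps
    // to the first or last output outside the key range.
    float Eval(float x) const {
        assert(!keys.empty());
        if (x <= keys.front().first) return keys.front().second;
        if (x >= keys.back().first) return keys.back().second;
        for (std::size_t i = 1; i < keys.size(); ++i) {
            if (x <= keys[i].first) {
                const std::pair<float, float>& a = keys[i - 1];
                const std::pair<float, float>& b = keys[i];
                const float t = (x - a.first) / (b.first - a.first);
                return a.second + t * (b.second - a.second);
            }
        }
        return keys.back().second;
    }
};

// Brave agent: no desire to escape until health drops below 20% (assumed value).
ResponseCurve MakeBraveEscapeCurve() {
    ResponseCurve c;
    c.keys = {{0.0f, 1.0f}, {0.2f, 0.0f}, {1.0f, 0.0f}};
    return c;
}

// Coward agent: desire to escape starts rising once health falls to 60%
// and saturates at 40% (the 40% point is an assumed value).
ResponseCurve MakeCowardEscapeCurve() {
    ResponseCurve c;
    c.keys = {{0.0f, 1.0f}, {0.4f, 1.0f}, {0.6f, 0.0f}, {1.0f, 0.0f}};
    return c;
}
```

At 50% health the brave curve still returns 0 while the coward curve is already about halfway to its maximum, so swapping one curve for the other changes the agent's "character" without touching the selection code.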

This framework is very flexible: all you have to do is add the Utility AI component to your AI controller and set up the AI tasks. The system can be combined with other systems such as behavior trees, EQS, or state machines, or it can be used as an independent AI framework.

Practical Examples:

Hide and Seek Scenario

My utility agent has access to different parameters, such as "Target visibility," "Distance to target," "Own HP," "enemy is reloading/healing" Booleans, and "target predicted location," and it decides the best action based on the utility scores of all actions. The result is more accurate, human-like behavior.

Enemy Combat Planning

In some scenarios, the AI agent decides to pick a better weapon or look for extra ammo before engaging the target. This emergent behavior is a byproduct of the Utility AI system, which tells the agent that the highest-priority task at the moment is finding ammo, not attacking the target.
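One way such a preference could fall out of the scores is sketched below. The names `CombatContext`, `ScoreAttack`, `ScoreFindAmmo`, and `ChooseCombatTask`, as well as the scoring formulas, are assumptions made for this example and are not taken from the framework itself.

```cpp
#include <cassert>
#include <string>

// Hypothetical inputs for the two competing tasks.
struct CombatContext {
    float ammoFraction;   // 0 = out of ammo, 1 = fully stocked
    bool targetVisible;   // can the agent currently see the target?
    bool ammoPickupKnown; // does the agent know where to find ammo?
};

// Attacking is only worthwhile with a visible target and scales with ammo.
float ScoreAttack(const CombatContext& c) {
    return c.targetVisible ? c.ammoFraction : 0.0f;
}

// Looking for ammo becomes more attractive as ammo runs out,
// but only if a pickup location is known.
float ScoreFindAmmo(const CombatContext& c) {
    return c.ammoPickupKnown ? 1.0f - c.ammoFraction : 0.0f;
}

// Picks the higher-scoring task; falls back to "Idle" when neither scores.
std::string ChooseCombatTask(const CombatContext& c) {
    assert(c.ammoFraction >= 0.0f && c.ammoFraction <= 1.0f);
    const float attack = ScoreAttack(c);
    const float findAmmo = ScoreFindAmmo(c);
    if (attack <= 0.0f && findAmmo <= 0.0f) {
        return "Idle";
    }
    return findAmmo > attack ? "FindAmmo" : "Attack";
}
```

Nothing in this sketch says "reload before fighting." With ammo at 10% and a known pickup, finding ammo simply outscores attacking, which is the kind of emergent ordering described above.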
